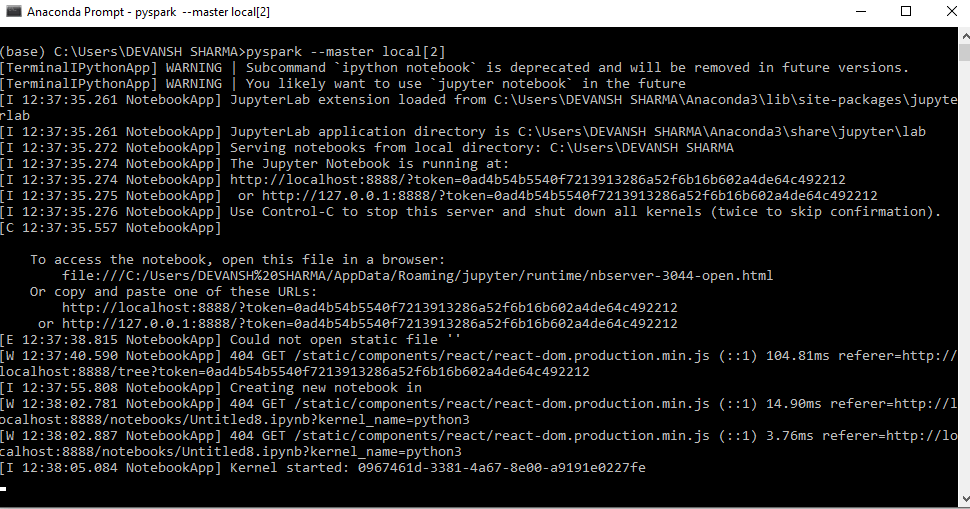

#How to install pyspark with pip how to

In summary, you have learned how to import PySpark libraries in Jupyter or in a shell or script, either by setting the right environment variables or by installing and using the findspark module. If you have a different Spark version, adjust the version in the paths accordingly.
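Before changing any environment variables, it can help to check what the current interpreter already sees. The snippet below is a minimal sketch using only the standard library; the function name `pyspark_status` is hypothetical and not part of PySpark or findspark.

```python
import importlib.util
import os


def pyspark_status():
    """Report whether pyspark is importable and whether SPARK_HOME is set."""
    # find_spec returns None when the module cannot be located on sys.path.
    spec = importlib.util.find_spec("pyspark")
    return {
        "importable": spec is not None,
        "location": spec.origin if spec is not None else None,
        "spark_home": os.environ.get("SPARK_HOME"),
    }


print(pyspark_status())
```

If `importable` is false and `spark_home` is empty, neither the pip package nor the environment variables are in place yet.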

#How to install pyspark with pip install

In Spark 2.1, PySpark was available as a Python package but was not on PyPI, so it had to be installed manually by executing the setup.py included with the Spark distribution. Since Spark 2.2.0, PySpark is also available as a Python package on PyPI and can be installed using pip.

To install pip and setuptools, run `pip install --trusted-host … --trusted-host … pip setuptools`. Or, if you are installing python3-pip, use `pip3 install --trusted-host … --trusted-host …` instead.

On Mac I have Spark version 2.4.0, hence the variables below. Put these in your .bashrc file and re-load the file by using `source ~/.bashrc`.

`export SPARK_HOME=/usr/local/Cellar/apache-spark/2.4.0`

`export PYTHONPATH=$SPARK_HOME/libexec/python:$SPARK_HOME/libexec/python/build:$PYTHONPATH`

3.3 Windows PySpark environment

For my Windows environment, I have the PySpark version spark-3.0.0-bin-hadoop2.7, so below are my environment variables. Set these on the Windows environment variables screen.

`set SPARK_HOME=C:\apps\opt\spark-3.0.0-bin-hadoop2.7`

`set PYTHONPATH=%SPARK_HOME%/python;%SPARK_HOME%/python/lib/py4j-0.10.9-src.zip;%PYTHONPATH%`
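The same paths can also be added from inside Python instead of through PYTHONPATH, which is roughly what helper modules such as findspark do. The following is a minimal sketch, not findspark's actual implementation: the function names are hypothetical, and it assumes the `python` and `python/lib` layout of a downloaded Spark distribution (for a Homebrew install, pass the `libexec` directory as `spark_home`).

```python
import glob
import os
import sys


def spark_python_paths(spark_home):
    """Return the directory and py4j archive that PySpark needs on the import path."""
    python_dir = os.path.join(spark_home, "python")
    # The py4j archive name changes between Spark releases, so search for it.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


def add_spark_to_sys_path(spark_home=None):
    """Prepend the PySpark paths to sys.path, reading SPARK_HOME if none is given."""
    spark_home = spark_home or os.environ.get("SPARK_HOME")
    if not spark_home:
        raise RuntimeError("SPARK_HOME is not set")
    paths = spark_python_paths(spark_home)
    # Insert in reverse so the final order in sys.path matches `paths`.
    for path in reversed(paths):
        if path not in sys.path:
            sys.path.insert(0, path)
    return paths
```

Calling `add_spark_to_sys_path()` before `import pyspark` has the same effect for the current process as setting PYTHONPATH in the shell, without hard-coding the py4j version.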

There can be a number of reasons to install Jupyter on your local computer, and there can be some challenges as well. In this article you learn how to install Jupyter notebook with the custom PySpark (for Python) and Apache Spark (for Scala) kernels with Spark magic, and connect the notebook to an HDInsight cluster.

For Python 2.7, the latest version of the pip installation file, get-pip.py, can be downloaded directly: in the scripts folder, execute the download command to pull get-pip.py. You can also use scripts to automatically download the highest supported version of pip. Once pip is available, install PySpark with `pip install pyspark`.

#How to install pyspark with pip mac os

3.1 Linux on Ubuntu

`export SPARK_HOME=/Users/prabha/apps/spark-2.4.0-bin-hadoop2.7`

`export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/build:$PYTHONPATH`

Put these in your .bashrc file and re-load the file by using `source ~/.bashrc`.

3.2 Mac OS

Python 2 is no longer supported as of pip 21.0. Use the pip command to install the PySpark module so that it can be imported in the Python shell.

A related package, pyspark-pandas, provides tools and algorithms for pandas DataFrames distributed on PySpark and can be installed with `pip install pyspark-pandas`. Its description asks you to consider the SparklingPandas project before this one.

Installing PySpark with Jupyter notebook on Ubuntu 18.04 LTS: in this tutorial we will learn how to install and work with PySpark on a Jupyter notebook on an Ubuntu machine and build a Jupyter server by exposing it through an nginx reverse proxy over SSL. This way, the Jupyter server will be remotely accessible.

To start the shell on YARN, run `bin/pyspark --master yarn-client --conf … --conf …`.

#How to install pyspark with pip driver

How to install PySpark locally: download and configure Spark. First create a directory for storing Spark. Then in your /. … After setting these variables, you should no longer see the error `No module named pyspark` when importing PySpark in Python.

When the pyspark shell is launched with virtualenv enabled, it creates an isolated virtual environment in the Spark driver and executor processes instead of using the default Python version running on the host.

If you need more information on how to import PySpark in the Python shell, you may have a look at the video on Krish Naik's YouTube channel.

Now set SPARK_HOME and PYTHONPATH according to your installation. For my articles, I run my PySpark programs on Linux, Mac and Windows, hence I show the configuration I have for each.

PySpark is a Python API for using Spark, which is a parallel and distributed engine for running big data applications. A first program follows these steps: import the pyspark module, import SparkSession from the pyspark.sql module, create a SparkSession with an app name by calling getOrCreate(), create a dictionary with 3 pairs of 8 values each inside a list, and create a DataFrame from that list of dictionaries.

How to get started with PySpark: start a new Conda environment.
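The steps for creating a DataFrame from a list of dictionaries can be sketched as follows. The sample data, the column names and the app name `example-app` are assumptions made for illustration, since the original values are not given; the PySpark import is placed inside the function so the file still loads when pyspark is not installed.

```python
def make_rows():
    """Hypothetical sample data: one dictionary with 3 pairs of 8 values each, inside a list."""
    return [{
        "id": [1, 2, 3, 4, 5, 6, 7, 8],
        "score": [10, 20, 30, 40, 50, 60, 70, 80],
        "label": ["a", "b", "c", "d", "e", "f", "g", "h"],
    }]


def build_dataframe(rows, app_name="example-app"):
    """Create a SparkSession and build a DataFrame from a list of dictionaries."""
    # Import the SparkSession from the pyspark.sql module (requires pyspark).
    from pyspark.sql import SparkSession

    # Create the SparkSession and give it an app name.
    spark = SparkSession.builder.appName(app_name).getOrCreate()
    # Create a DataFrame from the given list of dictionaries.
    return spark.createDataFrame(rows)
```

With PySpark installed, `build_dataframe(make_rows()).show()` prints a single row whose three columns each hold an array of eight values.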
